Two sources of sound, S1 and S2, emitting waves of equal wavelength 20.0 cm, are placed with a separation of 20.0 cm between them. A detector can be moved on a line parallel to S1S2 and at a distance of 20.0 cm from it. Initially, the detector is equidistant from the two sources. Assuming that the waves emitted by the sources are in phase, find the minimum distance through which the detector should be shifted to detect a minimum of sound.

Given:

Wavelength of sound wave, $\lambda = 20$ cm

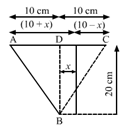

Separation between the two sources AC = 20 cm

Perpendicular distance of the detector's line from the line joining the sources, BD = 20 cm

If the detector is moved through a distance $x$, then the path difference of the sound waves from sources A and C reaching B is given by:


Path difference $= AB - BC = \sqrt{(10 + x)^2 + 20^2} - \sqrt{(10 - x)^2 + 20^2}$ (all lengths in cm)
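The path-difference expression can be checked numerically. The sketch below assumes a coordinate setup that is not stated explicitly in the solution: source A at $(-10, 0)$, source C at $(+10, 0)$, and the detector B on the line $y = 20$, shifted by $x$ from the midpoint towards C. The name `path_difference` is illustrative only.

```python
import math

# Assumed coordinates (cm): A at (-10, 0), C at (+10, 0),
# detector B at (x, 20) on the line parallel to AC.
HALF_SEPARATION = 10.0  # half of AC = 20 cm
LINE_DISTANCE = 20.0    # BD = 20 cm

def path_difference(x: float) -> float:
    """Return AB - BC in cm for a detector shifted x cm from the midpoint."""
    ab = math.hypot(HALF_SEPARATION + x, LINE_DISTANCE)  # distance from A
    bc = math.hypot(HALF_SEPARATION - x, LINE_DISTANCE)  # distance from C
    return ab - bc

# At the starting position the detector is equidistant from both sources.
print(path_difference(0.0))  # prints 0.0
```

Because both distances are computed the same way, the initial position gives exactly zero path difference, i.e. a maximum of sound.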

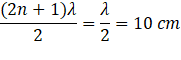

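For a minimum, the two waves must arrive half a wavelength out of step, so for the smallest shift this path difference should be equal to $\dfrac{\lambda}{2} = 10$ cm.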

So,

$$\sqrt{(10 + x)^2 + 20^2} - \sqrt{(10 - x)^2 + 20^2} = 10$$

On solving, we get $x \approx 12.6$ cm.
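The "on solving" step involves a little algebra. One way to carry it out, writing $d_1 = AB$ and $d_2 = BC$ (symbols introduced here only for brevity), is to use the difference of squares so that a single square root remains to be squared:

$$
\begin{aligned}
d_1^2 - d_2^2 &= (10 + x)^2 - (10 - x)^2 = 40x \\
d_1 + d_2 &= \frac{d_1^2 - d_2^2}{d_1 - d_2} = \frac{40x}{10} = 4x \\
d_1 &= \frac{(d_1 + d_2) + (d_1 - d_2)}{2} = 2x + 5 \\
(2x + 5)^2 &= (10 + x)^2 + 20^2 \;\Rightarrow\; 4x^2 + 20x + 25 = x^2 + 20x + 500 \\
3x^2 &= 475 \;\Rightarrow\; x = \sqrt{475/3} \approx 12.6\ \text{cm}
\end{aligned}
$$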

Hence, the detector should be shifted by a distance of 12.6 cm.
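As a numerical cross-check of the 12.6 cm result, the sketch below solves $AB - BC = \lambda/2$ by bisection, using the same assumed coordinates (sources at $x = \pm 10$ cm, detector on $y = 20$ cm); the helper names are hypothetical. Bisection works here because $AB - BC$ increases steadily as the detector moves towards C. The sketch also illustrates why only the $\lambda/2$ condition matters: by the triangle inequality the path difference always stays below $AC = 20$ cm, so the next minimum, at $3\lambda/2 = 30$ cm, can never be reached on this line.

```python
import math

WAVELENGTH = 20.0       # cm
HALF_SEPARATION = 10.0  # cm, half of AC (assumed: sources at x = -10 and +10)
LINE_DISTANCE = 20.0    # cm, BD (assumed: detector moves along y = 20)

def path_difference(x: float) -> float:
    """AB - BC in cm for a detector shifted x cm from the midpoint."""
    return (math.hypot(HALF_SEPARATION + x, LINE_DISTANCE)
            - math.hypot(HALF_SEPARATION - x, LINE_DISTANCE))

def shift_for_path_difference(target: float, lo: float = 0.0,
                              hi: float = 1000.0, tol: float = 1e-9) -> float:
    """Bisection for x in [lo, hi] with path_difference(x) == target.

    Relies on path_difference being increasing in x.
    """
    if not path_difference(lo) <= target <= path_difference(hi):
        raise ValueError("target path difference is not reachable in [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if path_difference(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# First minimum: path difference of lambda/2 = 10 cm.
x_min = shift_for_path_difference(WAVELENGTH / 2)
print(f"{x_min:.1f} cm")  # prints 12.6 cm

# Next minimum would need 3*lambda/2 = 30 cm, more than AC = 20 cm.
try:
    shift_for_path_difference(3 * WAVELENGTH / 2)
except ValueError as err:
    print("second minimum:", err)
```

The bisection result agrees with the closed form $x = \sqrt{475/3}$ to well within the stated tolerance.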